The Statistics output in the Query Profile Overview in Snowflake provides detailed insight into the performance of the different parts of a query. In particular, the "Most Expensive Nodes" pane lists the operator nodes that account for the largest share of the query's total execution time. Identifying these nodes helps pinpoint performance bottlenecks, so optimization effort can focus on the most resource-intensive parts of the query.
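Outside the Snowsight UI, a similar per-operator breakdown can be queried with the GET_QUERY_OPERATOR_STATS table function. The sketch below assumes the query to analyze is the previous statement in the same session and that the EXECUTION_TIME_BREAKDOWN column exposes an overall_percentage field; treat both as assumptions to verify against the function's documentation.

```sql
-- Assumption: the query to analyze was the previous statement in this session.
-- Assumption: execution_time_breakdown contains an overall_percentage field.
SELECT
    operator_id,
    operator_type,
    execution_time_breakdown:overall_percentage::FLOAT AS pct_of_execution_time
FROM TABLE(GET_QUERY_OPERATOR_STATS(LAST_QUERY_ID()))
ORDER BY pct_of_execution_time DESC NULLS LAST
LIMIT 5;  -- the top operators, roughly what "Most Expensive Nodes" shows
```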

References:

Snowflake Documentation on Query Profile Overview: It details the components of the profile overview, emphasizing how to interpret the statistics section to improve query performance by understanding which nodes are most resource-intensive.

QUESTION NO: 582

How do secure views compare to non-secure views in Snowflake?

A. Secure views execute slowly compared to non-secure views.

B. Non-secure views are preferred over secure views when sharing data.

C. Secure views are similar to materialized views in that they are the most performant.

D. There are no performance differences between secure and non-secure views.

Answer: A

Secure views and non-secure views in Snowflake differ mainly in how they protect data. The definition of a secure view is visible only to authorized users, and Snowflake bypasses certain internal query optimizations for secure views because those optimizations could indirectly expose data from the underlying tables that the view is meant to hide. Because of this, secure views can execute more slowly than equivalent non-secure views. Snowflake therefore recommends against using secure views for views that exist only for query convenience, and reserving them for cases where data privacy matters, such as data sharing.

References:

Snowflake Documentation on Working with Secure Views: It describes how secure views differ from non-secure views, noting that secure views bypass some view optimizations and can therefore execute more slowly.
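A minimal sketch of declaring a secure view, checking its status, and switching it back to a regular view. The database, schema, table, and column names are hypothetical placeholders.

```sql
-- Hypothetical objects: sales_db.public.orders and its columns are placeholders.
CREATE OR REPLACE SECURE VIEW sales_db.public.orders_summary AS
SELECT region, SUM(amount) AS total_amount
FROM sales_db.public.orders
GROUP BY region;

-- The is_secure column in the SHOW VIEWS output reports whether a view is secure.
SHOW VIEWS LIKE 'ORDERS_SUMMARY' IN SCHEMA sales_db.public;

-- Converting the view back to a regular (non-secure) view.
ALTER VIEW sales_db.public.orders_summary UNSET SECURE;
```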

QUESTION NO: 583

When using SnowSQL, which configuration options are required when unloading data from a SQL query run on a local machine? (Select TWO).

A. echo

B. quiet

C. output_file

D. output_format

E. force_put_overwrite

Answer: C, D

When unloading data with SnowSQL (Snowflake's command-line client) to a file on a local machine, you need to specify configuration options that determine where the data is written and in what format. The required options are:

C. output_file: This configuration option specifies the file path where the output from the query should be stored. It is essential for directing the results of your SQL query into a local file, rather than just displaying it on the screen.

D. output_format: This option determines the format of the output (for example, csv, tsv, or json). It ensures that the data is unloaded in a structured format that meets the requirements of downstream processes or systems.

These options are specified in the SnowSQL configuration file or directly in the SnowSQL command line. The configuration file allows users to set defaults and customize their usage of SnowSQL, including output preferences for unloading data.
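A minimal sketch of setting these options for the current session with SnowSQL's !set command before running the query. The same options can instead be passed on the command line (for example, -o output_format=csv -o output_file=/tmp/orders.csv) or placed in the configuration file. The local path and table name are hypothetical, and the !set lines are SnowSQL client commands, not SQL.

```sql
-- SnowSQL client commands: run inside the snowsql client, not in a Snowsight worksheet.
-- Assumption: /tmp/orders.csv is a writable local path.
!set output_format=csv
!set output_file=/tmp/orders.csv
!set header=true
!set friendly=false
!set timing=false

-- Hypothetical table; its result set is written to /tmp/orders.csv as CSV.
SELECT order_id, region, amount FROM sales_db.public.orders;
```

Turning off friendly and timing keeps SnowSQL's banner and timing lines out of the output, which matters when the file is consumed by another program.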

References:

Snowflake Documentation: SnowSQL (CLI Client)

Snowflake Documentation: Configuring SnowSQL

QUESTION NO: 584

How can a Snowflake user post-process the result of SHOW FILE FORMATS?

A. Use the RESULT_SCAN function.

B. Create a CURSOR for the command.

C. Put it in the FROM clause in brackets.

D. Assign the command to RESULTSET.

Answer: A

First run SHOW FILE FORMATS, then treat its output as a table by passing that query's ID to RESULT_SCAN:

SHOW FILE FORMATS;

SELECT * FROM TABLE(RESULT_SCAN(LAST_QUERY_ID(-1)));

https://docs.snowflake.com/en/sql-reference/functions/result_scan#usage-notes
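Once the SHOW output is available through RESULT_SCAN, it can be filtered and sorted like any other table. A minimal sketch, assuming a hypothetical schema sales_db.public; columns returned by SHOW commands have lowercase names, so they must be double-quoted.

```sql
SHOW FILE FORMATS IN SCHEMA sales_db.public;  -- hypothetical schema

-- SHOW output column names are lowercase, so quote them in the SELECT.
SELECT "name", "type", "created_on"
FROM TABLE(RESULT_SCAN(LAST_QUERY_ID()))
WHERE "type" = 'CSV'
ORDER BY "name";
```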

QUESTION NO: 585

Which file function gives a user or application access to download unstructured data from a Snowflake stage?

A. BUILD_SCOPED_FILE_URL

B. BUILD_STAGE_FILE_URL

C. GET_PRESIGNED_URL

D. GET_STAGE_LOCATION

Answer: C

The function that provides access to download unstructured data from a Snowflake stage is:

C. GET_PRESIGNED_URL: This function generates a presigned URL for a single file in a stage. Anyone holding the URL can access or download the file directly from cloud storage, without authenticating to Snowflake, until the URL expires. This is particularly useful for unstructured data such as images, videos, or documents that must be accessed outside the Snowflake environment.

Example usage:

SELECT GET_PRESIGNED_URL(@stage_name, 'file_path');

This function simplifies the process of securely sharing or accessing files stored in Snowflake stages with external systems or users.
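A minimal sketch of generating one download URL per staged file by combining GET_PRESIGNED_URL with the stage's directory table. The stage name is hypothetical. Server-side encryption is assumed on the internal stage because files downloaded through a presigned URL from a client-side-encrypted internal stage are not readable. The third argument is the URL lifetime in seconds.

```sql
-- Hypothetical internal stage with server-side encryption and a directory table.
CREATE STAGE IF NOT EXISTS docs_stage
  ENCRYPTION = (TYPE = 'SNOWFLAKE_SSE')
  DIRECTORY = (ENABLE = TRUE);

-- After uploading files (for example with PUT), refresh the directory table.
ALTER STAGE docs_stage REFRESH;

-- One presigned URL per staged file, valid for one hour (3600 seconds).
SELECT relative_path,
       GET_PRESIGNED_URL(@docs_stage, relative_path, 3600) AS download_url
FROM DIRECTORY(@docs_stage);
```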

QUESTION NO: 586

When should a multi-cluster virtual warehouse be used in Snowflake?

A. When queuing is delaying query execution on the warehouse

B. When there is significant disk spilling shown on the Query Profile

C. When dynamic vertical scaling is being used in the warehouse

D. When there are no concurrent queries running on the warehouse

Answer: A

A multi-cluster virtual warehouse in Snowflake is designed to handle high concurrency and workload demands by allowing multiple clusters of compute resources to operate simultaneously. The correct scenario to use a multi-cluster virtual warehouse is:

A. When queuing is delaying query execution on the warehouse: Multi-cluster warehouses are ideal when the demand for compute resources exceeds the capacity of a single cluster, leading to query queuing. By enabling additional clusters, you can distribute the workload across multiple compute clusters, thereby reducing queuing and improving query performance.

This is especially useful in scenarios with fluctuating workloads or where it's critical to maintain low response times for a large number of concurrent queries.
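A minimal sketch of a multi-cluster warehouse in auto-scale mode, where Snowflake starts additional clusters when queries begin to queue and shuts them down as load drops. The warehouse name and sizes are hypothetical; multi-cluster warehouses require Enterprise Edition or higher.

```sql
-- Hypothetical warehouse; MIN_CLUSTER_COUNT < MAX_CLUSTER_COUNT enables auto-scale mode.
CREATE OR REPLACE WAREHOUSE reporting_wh
  WAREHOUSE_SIZE = 'MEDIUM'
  MIN_CLUSTER_COUNT = 1          -- a single cluster when load is light
  MAX_CLUSTER_COUNT = 4          -- up to four clusters when queries start queuing
  SCALING_POLICY = 'STANDARD'    -- favors starting clusters to minimize queuing
  AUTO_SUSPEND = 300
  AUTO_RESUME = TRUE;
```

Note that this adds clusters of the same size (scaling out for concurrency); it does not make any single query faster, which is what resizing the warehouse (scaling up) is for.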

QUESTION NO: 587

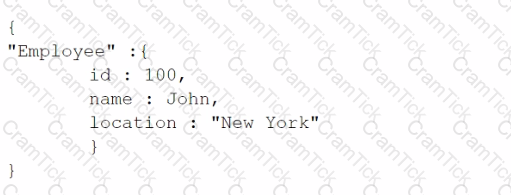

A JSON object is loaded into a column named data using a Snowflake variant datatype. The root node of the object is BIKE. The child attribute for this root node is BIKEID.

Which statement will allow the user to access BIKEID?

A. select data:BIKEID

B. select data.BIKE.BIKEID

C. select data:BIKE.BIKEID

D. select data:BIKE:BIKEID

Answer: C

In Snowflake, elements of a JSON document stored in a VARIANT column are referenced with path notation: a colon (:) separates the column name from the first-level element, and dots (.) traverse each deeper level. BIKE is the first-level element and BIKEID is its child, so the correct expression is data:BIKE.BIKEID. Option A skips the BIKE level, and after the first level further levels are traversed with dots rather than additional colons. Element names in these paths are case-sensitive.

References: Snowflake documentation on querying semi-structured data, which describes the colon and dot notations for traversing JSON stored in VARIANT columns.
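A minimal sketch that loads a small JSON object shaped like the one in the question and reads BIKEID with colon/dot notation, a cast, and the equivalent bracket notation. The table name and the JSON values are hypothetical.

```sql
CREATE OR REPLACE TEMPORARY TABLE bike_data (data VARIANT);

-- PARSE_JSON is not allowed in a VALUES clause, so insert through SELECT.
INSERT INTO bike_data
  SELECT PARSE_JSON('{"BIKE": {"BIKEID": 101, "MODEL": "city"}}');

SELECT data:BIKE.BIKEID            AS bikeid_variant,   -- option C: colon, then dot
       data:BIKE.BIKEID::NUMBER    AS bikeid_number,    -- cast out of VARIANT
       data['BIKE']['BIKEID']      AS bikeid_brackets   -- equivalent bracket notation
FROM bike_data;
```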

QUESTION NO: 588

Which Snowflake tool is recommended for data batch processing?

A. SnowCD

B. SnowSQL

C. Snowsight

D. The Snowflake API

Answer: B

For data batch processing in Snowflake, the recommended tool is:

B. SnowSQL: SnowSQL is the command-line client for Snowflake. It allows for executing SQL queries, scripts, and managing database objects. It's particularly suitable for batch processing tasks due to its ability to run SQL scripts that can execute multiple commands or queries in sequence, making it ideal for automated or scheduled tasks that require bulk data operations.

SnowSQL provides a flexible and powerful way to interact with Snowflake, supporting operations such as loading and unloading data, executing complex queries, and managing Snowflake objects from the command line or through scripts.
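A minimal sketch of a batch script that SnowSQL can run non-interactively, for example with snowsql -c my_connection -f batch_load.sql. The connection name, file name, paths, warehouse, and table are all hypothetical.

```sql
-- batch_load.sql (hypothetical); every object name below is a placeholder.
USE WAREHOUSE load_wh;
USE SCHEMA sales_db.public;

-- Step 1: upload local files to the table's internal stage (PUT works from SnowSQL).
PUT file:///data/exports/orders_*.csv @%orders AUTO_COMPRESS = TRUE;

-- Step 2: bulk-load the staged files into the table.
COPY INTO orders
  FROM @%orders
  FILE_FORMAT = (TYPE = 'CSV' SKIP_HEADER = 1)
  ON_ERROR = 'ABORT_STATEMENT';

-- Step 3: a simple post-load check.
SELECT COUNT(*) AS row_count FROM orders;
```

Because the whole job is a plain file of statements, it can be scheduled with any external scheduler (cron, an orchestration tool) that can invoke the snowsql executable.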

QUESTION NO: 589

How does the Snowflake search optimization service improve query performance?

A. It improves the performance of range searches.

B. It defines different clustering keys on the same source table.

C. It improves the performance of all queries running against a given table.

D. It improves the performance of equality searches.

Answer: D

The Snowflake Search Optimization Service is designed to enhance the performance of specific types of queries on large tables. The correct answer is:

D. It improves the performance of equality searches: The service speeds up point lookup queries that use equality predicates (e.g., WHERE column = value). It builds and maintains a persistent data structure, called a search access path, that tracks which micro-partitions may contain matching values.

This optimization is particularly beneficial for large tables where a full scan would be inefficient for selective equality searches. With the Search Optimization Service enabled, Snowflake uses the search access path to skip micro-partitions that cannot contain matching rows, instead of scanning the entire table.
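A minimal sketch of enabling search optimization for equality lookups on one column and then inspecting the configuration. The table, column, and lookup value are hypothetical; the feature requires Enterprise Edition or higher and incurs its own storage and compute costs.

```sql
-- Hypothetical table and column.
ALTER TABLE sales_db.public.orders
  ADD SEARCH OPTIMIZATION ON EQUALITY(customer_id);

-- A selective point lookup like this is the kind of query that can benefit.
SELECT * FROM sales_db.public.orders WHERE customer_id = 'C-10042';

-- Show which columns and search methods are configured.
DESCRIBE SEARCH OPTIMIZATION ON sales_db.public.orders;
```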

QUESTION NO: 590

What compute resource is used when loading data using Snowpipe?

A. Snowpipe uses virtual warehouses provided by the user.

B. Snowpipe uses an Apache Kafka server for its compute resources.

C. Snowpipe uses compute resources provided by Snowflake.

D. Snowpipe uses cloud platform compute resources provided by the user.

Answer: C

Snowpipe is Snowflake's continuous data ingestion service, which loads data shortly after files arrive in a stage. Snowpipe runs on compute resources that Snowflake provides and manages (serverless compute), separate from the virtual warehouses that users create for querying data. Snowpipe therefore does not consume credits from user-created virtual warehouses; its compute usage is billed separately, which makes it an efficient way to load data continuously into Snowflake.
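A minimal sketch of a pipe definition; note that it names no warehouse, because the COPY statement runs on Snowflake-managed compute. All object names are hypothetical, and AUTO_INGEST = TRUE assumes an external stage whose cloud storage event notifications have been configured.

```sql
-- Hypothetical names; AUTO_INGEST assumes event notifications on the external stage.
CREATE OR REPLACE PIPE sales_db.public.orders_pipe
  AUTO_INGEST = TRUE
AS
  COPY INTO sales_db.public.orders
  FROM @sales_db.public.orders_ext_stage
  FILE_FORMAT = (TYPE = 'CSV' SKIP_HEADER = 1);
-- No WAREHOUSE appears anywhere: Snowpipe supplies the compute.

-- Check the pipe's current execution state.
SELECT SYSTEM$PIPE_STATUS('sales_db.public.orders_pipe');
```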

QUESTION NO: 591

What is one of the characteristics of data shares?

A. Data shares support full DML operations.

B. Data shares work by copying data to consumer accounts.

C. Data shares utilize secure views for sharing view objects.

D. Data shares are cloud agnostic and can cross regions by default.

Answer: C

Data sharing in Snowflake allows for live, read-only access to data across different Snowflake accounts without the need to copy or transfer the data. One of the characteristics of data shares is the ability to use secure views. Secure views are used within data shares to restrict the access of shared data, ensuring that consumers can only see the data that the provider intends to share, thereby preserving privacy and security.
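A minimal provider-side sketch that shares only aggregated data through a secure view. The share, database, view, and consumer account identifiers are hypothetical.

```sql
-- Provider side; every object and account name is hypothetical.
CREATE SHARE orders_share;
GRANT USAGE ON DATABASE sales_db TO SHARE orders_share;
GRANT USAGE ON SCHEMA sales_db.public TO SHARE orders_share;

-- Expose only aggregated rows through a secure view.
CREATE OR REPLACE SECURE VIEW sales_db.public.regional_totals AS
  SELECT region, SUM(amount) AS total_amount
  FROM sales_db.public.orders
  GROUP BY region;

GRANT SELECT ON VIEW sales_db.public.regional_totals TO SHARE orders_share;

-- Make the share visible to a consumer account.
ALTER SHARE orders_share ADD ACCOUNTS = consumer_org.consumer_account;
```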

QUESTION NO: 592

Which DDL/DML operation is allowed on an inbound data share?

A. ALTER TABLE

B. INSERT INTO

C. MERGE

D. SELECT

Answer: D

In Snowflake, an inbound data share refers to the data shared with an account by another account. The only DDL/DML operation allowed on an inbound data share is SELECT. This restriction ensures that the shared data remains read-only for the consuming account, maintaining the integrity and ownership of the data by the sharing account.
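A minimal consumer-side sketch: the consumer creates a read-only database from the inbound share, can query it, and cannot modify it. The share identifier and object names are hypothetical.

```sql
-- Consumer side; provider_org.provider_account.orders_share is a hypothetical share identifier.
CREATE DATABASE shared_sales FROM SHARE provider_org.provider_account.orders_share;

-- Allowed: read the shared objects.
SELECT * FROM shared_sales.public.regional_totals;

-- Not allowed: databases created from a share are read-only, so this statement fails.
INSERT INTO shared_sales.public.regional_totals VALUES ('EMEA', 0);
```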

QUESTION NO: 593

In Snowflake, the use of federated authentication enables which Single Sign-On (SSO) workflow activities? (Select TWO).

A. Authorizing users

B. Initiating user sessions

C. Logging into Snowflake

D. Logging out of Snowflake

E. Performing role authentication

Answer: C, D

Federated authentication in Snowflake lets users log in with their organization's credentials through an external SAML 2.0 identity provider (IdP), providing Single Sign-On (SSO). Snowflake documents the following SSO workflows for federated authentication:

C. Logging into Snowflake: Users authenticate with their organization's identity provider instead of managing separate credentials for Snowflake.

D. Logging out of Snowflake: Federated authentication also covers how users log out of their Snowflake sessions.

The documentation also describes a system timeout due to inactivity. Authorizing users and working with roles remain governed by Snowflake's own role-based access control, not by the identity provider.
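Federated authentication is configured by registering the identity provider with Snowflake through a SAML2 security integration. A minimal sketch; the integration name, issuer, SSO URL, certificate, and login-page label are hypothetical placeholders that would come from the IdP's metadata.

```sql
-- Hypothetical IdP values; replace them with the values from your IdP's SAML metadata.
CREATE SECURITY INTEGRATION okta_sso
  TYPE = SAML2
  ENABLED = TRUE
  SAML2_ISSUER = 'http://www.okta.com/exk_example'
  SAML2_SSO_URL = 'https://example.okta.com/app/snowflake/exk_example/sso/saml'
  SAML2_PROVIDER = 'OKTA'
  SAML2_X509_CERT = 'MIIC_placeholder_certificate'   -- placeholder, not a real certificate
  SAML2_ENABLE_SP_INITIATED = TRUE
  SAML2_SP_INITIATED_LOGIN_PAGE_LABEL = 'Okta SSO';
```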

QUESTION NO: 594

A user wants to upload a file to an internal Snowflake stage using a put command.

Which tools and/or connectors could be used to execute this command? (Select TWO).

A. SnowCD

B. SnowSQL

C. SQL API

D. Python connector

E. Snowsight worksheets

Answer: B, D

The PUT command uploads files from a local file system, so it must be run from a client that can read local files. It can be executed from:

B. SnowSQL: SnowSQL, the command-line client for Snowflake, supports the PUT command, allowing users to upload files directly from their local file systems to Snowflake stages.

D. Python connector: The Snowflake Connector for Python can execute PUT like any other SQL statement (for example, through a cursor), uploading local files to an internal stage.

PUT cannot be executed from Snowsight worksheets, and the SQL API does not support it. SnowCD is a connectivity diagnostic tool and does not run SQL commands.
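A minimal sketch of the statements as run from SnowSQL; the same PUT text can be passed to a driver such as the Python connector. The local path and stage name are hypothetical.

```sql
-- Run from SnowSQL or through a driver; not from a Snowsight worksheet.
-- Hypothetical local path and stage name.
CREATE STAGE IF NOT EXISTS my_int_stage;

PUT file:///tmp/data/orders.csv @my_int_stage AUTO_COMPRESS = TRUE OVERWRITE = FALSE;

-- Confirm the upload (with AUTO_COMPRESS the file is stored as orders.csv.gz).
LIST @my_int_stage;
```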

References:

Snowflake Documentation: Loading Data into Snowflake using SnowSQL

Snowflake Documentation: PUT command (usage notes on supported clients)